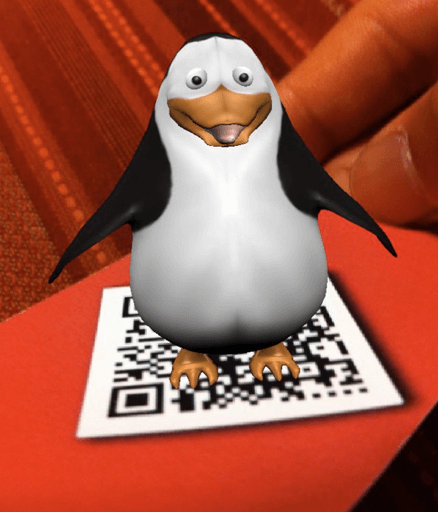

E-Commerce Using Augmented Reality

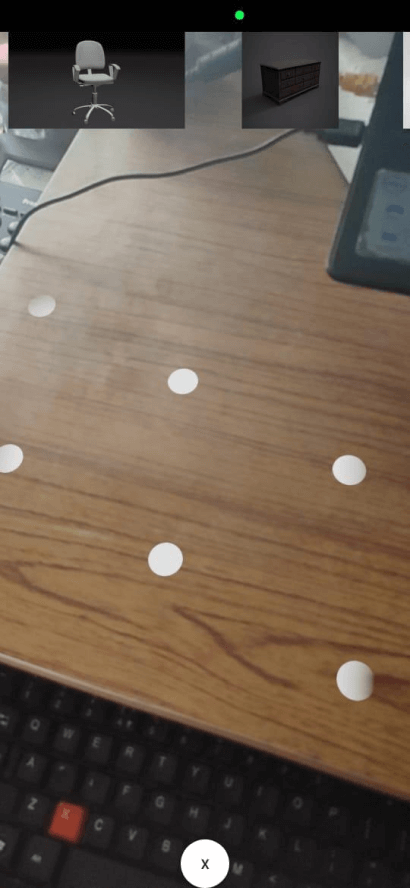

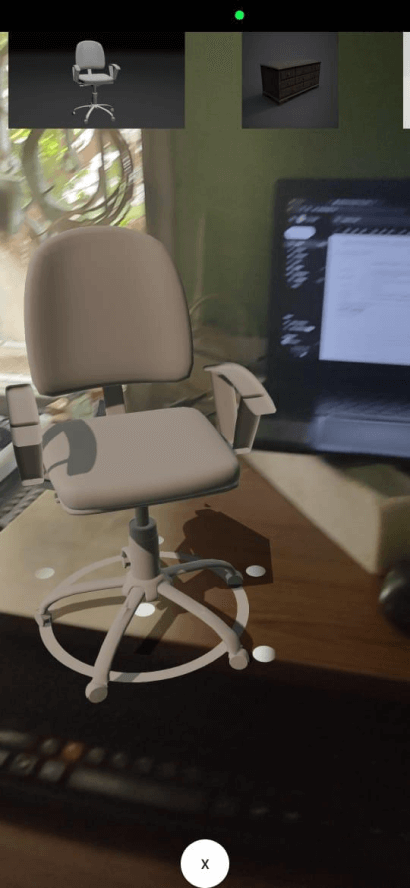

For our final-year project, we built an augmented reality mobile application for e-commerce that superimposes virtual furniture onto a detected real-world plane.

MY ROLE

Team Lead

Developer

TIMELINE

NOV 2019 - FEB 2020

TOOLS

Android Studio

TEAM

Julian Samuel

Kaushik H

Harvish Kant

Sherine Glory